ERAU Industrial Math Project: Quantifying Uncertainties in Image Segmentation

PI Mihhail Berezovski

The Signal Processing and Applied Mathematics research group at the Nevada National Security Site (NNSS) is excited to partner with students at Embry-Riddle Aeronautical University to develop a rigorous statistical method for characterizing the effects of user interaction on supervised image segmentation.

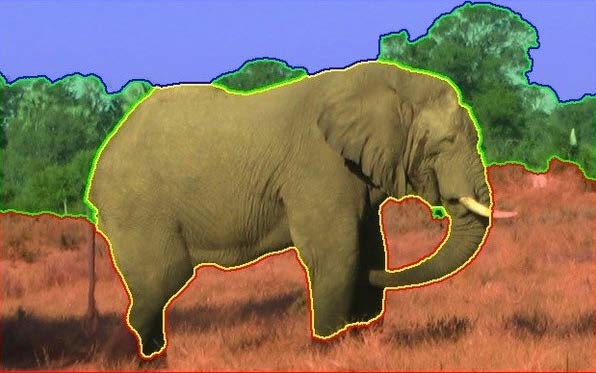

One of the most important methods for understanding dynamic material experiments, such as high-explosive-driven implosion studies or laser-driven shock studies, is to capture images of the experiment and analyze them quantitatively. In many cases, we are most interested in regions of different materials in an image, or regions of the same material in different states. Image segmentation is the process of separating the parts of an image into such categories.

Google Scholar returns about 2.3M results for image segmentation, so there is no shortage of approaches. Most of them, however, fall into the category of qualitative image analysis: one just wants to recognize whether an object exists in an image, or to make qualitative statements about the different regions of an image. Scientific analysis of experiments requires quantitative imaging, where it is important both to find the boundaries between the different regions accurately and to assess the uncertainties associated with those boundaries. In some sense, we would like to draw error bars around the boundary curves. To accomplish this, our team at the NNSS has developed a new statistical method for image segmentation, called Locally Adaptive Discriminant Analysis (LADA).

Because this algorithm bases its quantitative analysis of material boundary locations on local information, enough local information must first be provided for it to be of practical use. Here, the user must select a large portion of the image as training data to aid in the identification of the boundaries. The algorithm is informed through local information because the intensity of a material in our images often changes depending on where the material is located in the image, for example, due to lighting heterogeneity. As a result, different portions of an image can have different intensities yet correspond to the same material. When training data are selected only locally, the algorithm is not confused by training data for the same material from other regions of the image.
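The effect of lighting heterogeneity can be illustrated with a small synthetic example. The sketch below is not LADA; it swaps in a simple midpoint-of-class-means threshold as a stand-in classifier, and the image, the illumination ramp, the window width, and the helper names (`make_image`, `midpoint_classify`) are all assumptions made for illustration. It compares one threshold trained on the whole image with thresholds trained separately inside narrow column windows.

```python
import numpy as np

def make_image(n=100):
    """Hypothetical test image: two materials under a left-to-right lighting ramp."""
    labels = np.zeros((n, n), dtype=int)
    labels[n // 2:, :] = 1                      # material 1 fills the bottom half
    base = np.where(labels == 1, 2.0, 1.0)      # intrinsic intensity of each material
    illumination = np.linspace(1.0, 3.0, n)     # lighting grows across the columns
    return base * illumination[np.newaxis, :], labels

def midpoint_classify(pixels, train_pixels, train_labels):
    """Stand-in classifier (not LADA): threshold halfway between the class means."""
    mean0 = train_pixels[train_labels == 0].mean()
    mean1 = train_pixels[train_labels == 1].mean()
    return (pixels > 0.5 * (mean0 + mean1)).astype(int)

image, truth = make_image()

# Global training: one threshold for the whole image.
global_pred = midpoint_classify(image, image, truth)

# Local training: a separate threshold inside each 10-column window.
local_pred = np.zeros_like(truth)
width = 10
for start in range(0, image.shape[1], width):
    cols = slice(start, start + width)
    local_pred[:, cols] = midpoint_classify(image[:, cols], image[:, cols], truth[:, cols])

global_accuracy = (global_pred == truth).mean()
local_accuracy = (local_pred == truth).mean()
print(f"global training accuracy: {global_accuracy:.3f}")
print(f"local training accuracy:  {local_accuracy:.3f}")
```

In this toy setup the dimly lit part of material 1 is about as bright as the brightly lit part of material 0, so the single global threshold mislabels it, while each local threshold only has to separate intensities seen under nearly uniform lighting. For simplicity the sketch trains on every pixel; in practice the training data would be a user-selected subset.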

The primary questions that we would like the ERAU team to address are:

1. How do the boundaries that are computed by LADA vary as the user-selected training data are varied? (If two people select different training data, how different are the resulting boundaries?)

2. How does one even quantify the differences between two sets of boundaries computed for the same region?

3. How do the confidence bands that are computed by LADA vary as the user-selected training data are varied?

4. How does one quantify the differences between two sets of confidence bands for a region's boundary?

5. Can we quantify how boundaries and uncertainties are affected by the sheer amount of training data provided? (Does doubling the amount of training data cut the uncertainties in half?)
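As a starting point for question 2, one candidate way to compare two boundaries is to treat each as a set of sampled points and measure how far apart the sets are. The sketch below is only an illustration under that assumption, not a method prescribed by the project or by LADA: it computes the symmetric Hausdorff distance (the worst-case mismatch) and an averaged nearest-point distance (the typical mismatch) with NumPy. The function names and the two example curves are hypothetical.

```python
import numpy as np

def nearest_distances(a, b):
    """For each point in a, the Euclidean distance to its nearest point in b."""
    diff = a[:, np.newaxis, :] - b[np.newaxis, :, :]
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)

def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance: the largest nearest-point distance in either direction."""
    return max(nearest_distances(a, b).max(), nearest_distances(b, a).max())

def mean_boundary_distance(a, b):
    """Average of the two directed mean nearest-point distances."""
    return 0.5 * (nearest_distances(a, b).mean() + nearest_distances(b, a).mean())

# Two hypothetical boundaries sampled at the same x positions:
# a flat curve at y = 0, and a curve at y = 2 with one excursion to y = 5.
x = np.arange(0.0, 11.0)
boundary_a = np.column_stack([x, np.zeros_like(x)])
y_b = np.full_like(x, 2.0)
y_b[5] = 5.0
boundary_b = np.column_stack([x, y_b])

worst_case = hausdorff_distance(boundary_a, boundary_b)
typical = mean_boundary_distance(boundary_a, boundary_b)
print(f"Hausdorff distance: {worst_case:.3f}")
print(f"mean boundary distance: {typical:.3f}")
```

The two numbers answer different questions: the Hausdorff distance is driven entirely by the single excursion, while the averaged distance stays close to the offset shared by most of the curve. Which behavior is preferable for comparing segmentations from different users is part of what the project questions leave open.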

Research Dates

02/01/2018 to 09/01/2018